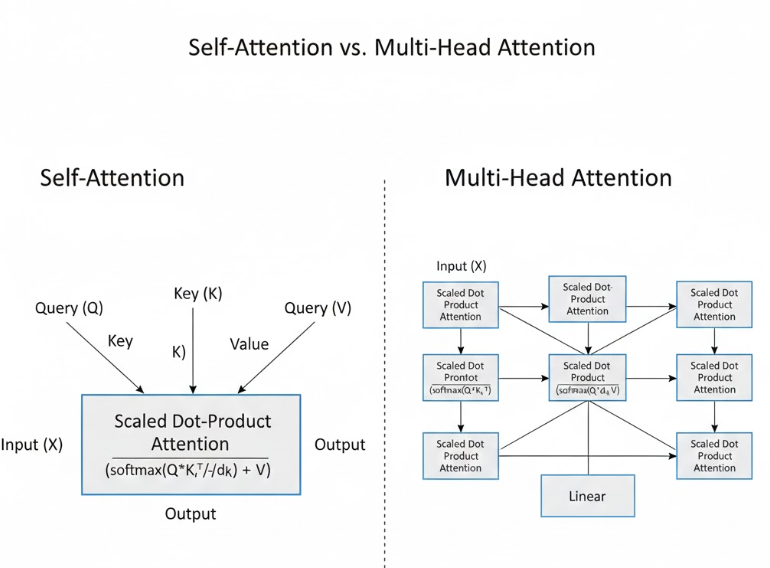

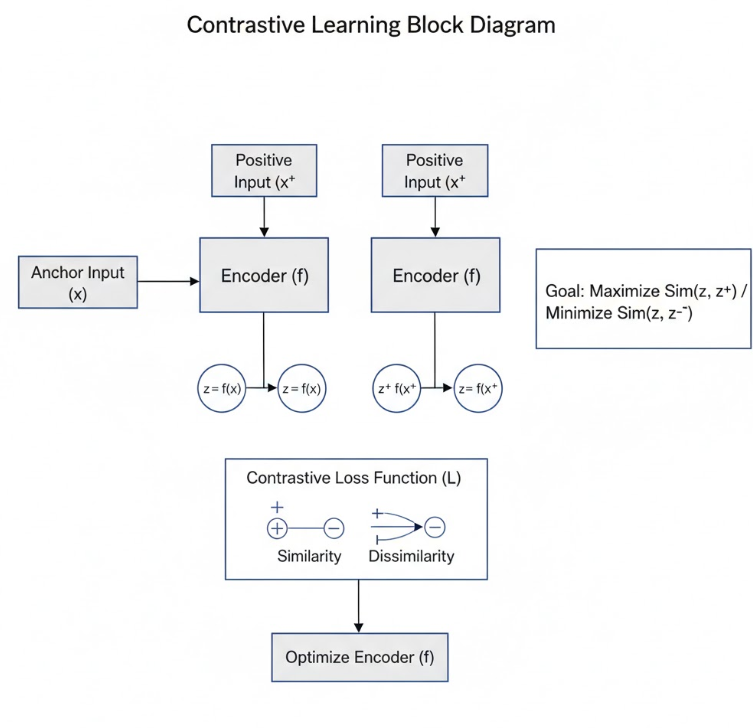

1. Demystifying Attention: Building Core Mechanisms of Transformers in PyTorch

A from-scratch PyTorch implementation of the core Transformer components (self-attention, masked attention, and multi-head attention), following the seminal paper *Attention Is All You Need* (Vaswani et al., 2017).
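A minimal sketch of how the three components relate is shown below. It is not this repository's code: the names `scaled_dot_product_attention`, `causal_mask`, and `MultiHeadAttention` are illustrative. Self-attention computes `softmax(Q Kᵀ / √d_k) V`. Masked attention sets disallowed scores to `-inf` before the softmax so those positions receive zero weight. Multi-head attention runs several attentions in parallel on learned projections of the input and concatenates the results. The mask convention used here (`True` means "may attend") is an assumption of this sketch.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


def scaled_dot_product_attention(q, k, v, mask=None):
    """softmax(Q K^T / sqrt(d_k)) V for tensors shaped (..., seq_len, d_k).

    mask (assumed convention): bool tensor broadcastable to
    (..., seq_len_q, seq_len_k), where True marks allowed positions.
    """
    d_k = q.size(-1)
    scores = q @ k.transpose(-2, -1) / math.sqrt(d_k)
    if mask is not None:
        # Blocked positions get -inf, so softmax gives them exactly zero weight.
        scores = scores.masked_fill(~mask, float("-inf"))
    weights = F.softmax(scores, dim=-1)
    return weights @ v, weights


def causal_mask(seq_len):
    """Lower-triangular mask: position i may attend only to positions <= i."""
    return torch.tril(torch.ones(seq_len, seq_len, dtype=torch.bool))


class MultiHeadAttention(nn.Module):
    def __init__(self, d_model, num_heads):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.num_heads = num_heads
        self.d_k = d_model // num_heads
        # Learned input projections (W^Q, W^K, W^V) and output projection (W^O).
        self.w_q = nn.Linear(d_model, d_model)
        self.w_k = nn.Linear(d_model, d_model)
        self.w_v = nn.Linear(d_model, d_model)
        self.w_o = nn.Linear(d_model, d_model)

    def _split_heads(self, x):
        # (batch, seq, d_model) -> (batch, heads, seq, d_k)
        batch, seq_len, _ = x.shape
        return x.view(batch, seq_len, self.num_heads, self.d_k).transpose(1, 2)

    def forward(self, query, key, value, mask=None):
        q = self._split_heads(self.w_q(query))
        k = self._split_heads(self.w_k(key))
        v = self._split_heads(self.w_v(value))
        # A (seq_q, seq_k) mask broadcasts across the batch and head dimensions.
        out, weights = scaled_dot_product_attention(q, k, v, mask)
        # Concatenate heads: (batch, heads, seq, d_k) -> (batch, seq, d_model)
        batch, _, seq_len, _ = out.shape
        out = out.transpose(1, 2).contiguous().view(batch, seq_len, self.num_heads * self.d_k)
        return self.w_o(out), weights


# Usage: masked (causal) multi-head self-attention on a random batch.
torch.manual_seed(0)
x = torch.randn(2, 5, 16)  # (batch, seq_len, d_model)
mha = MultiHeadAttention(d_model=16, num_heads=4)
out, weights = mha(x, x, x, mask=causal_mask(5))
print(out.shape)      # torch.Size([2, 5, 16])
print(weights.shape)  # torch.Size([2, 4, 5, 5])
```

Applying the mask to the scores before the softmax, rather than zeroing weights afterwards, keeps each row of attention weights summing to 1 over the allowed positions only.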